Recursive Strategy: How Intelligent Systems Think Through Us

When systems start to think back

Strategy used to be an external act, something done to the world. Strategists stood apart and planned from a distance, believing that reality could be mapped and mastered. It was the worldview of 20th-century modernism, an era that trusted in blueprints, master plans, and absolute systems. Its architects saw the future as a project and progress as a map: if you could chart it, you could control it. This type of thinking has now largely dissolved, and although some still try, it never gets far. The idea that the world is a stable object, waiting to be arranged by human intention, no longer holds. Today we move within systems that learn and adapt before we finish forming a thought. Our reality moves fast, and in many instances it is the dashboards that predict our next move. We have models that preempt our choices, and tools that participate in decision making, where in the past they only responded to what we initiated.

Modernism’s external frame has inverted. We used to live in a world that was to be managed; now we live in a world that interprets us. We used systems to extend our thinking, but now they think back. The strategist, once a distant observer, is now inside a loop of algorithmic inference, real-time feedback, and recursive influence. Planning is less about control and more about negotiating with an intelligence that we have set in motion. What emerges is a kind of recursion: each decision becomes data for the next, and each action is a premise for the next model. The strategist is not separate from the system but embedded within a loop of mutual influence. We shape the logic of the system, and it shapes ours.

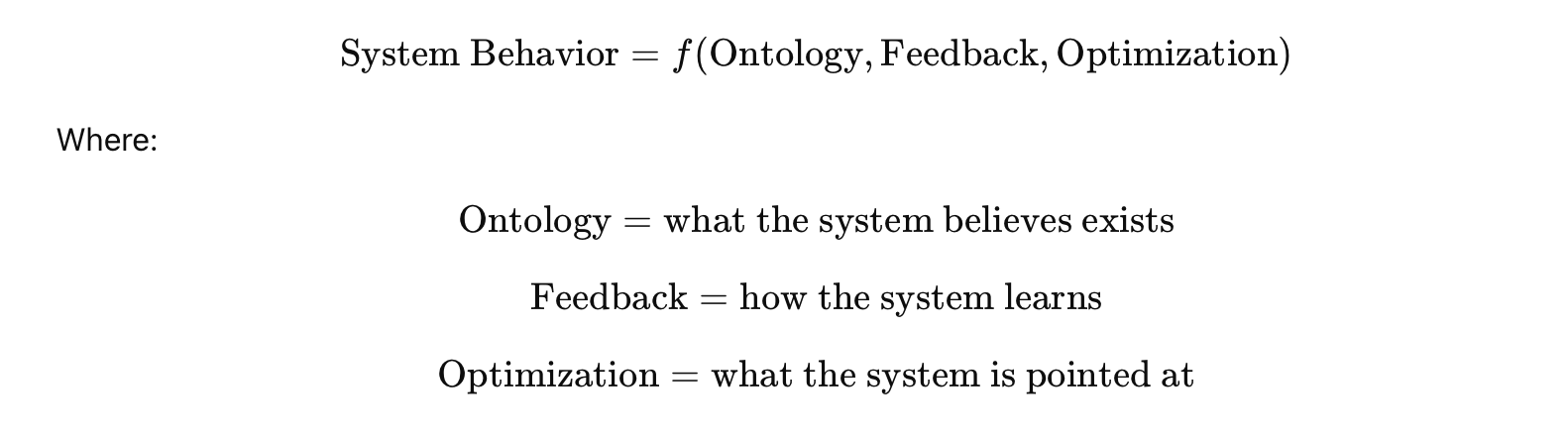

In this environment, strategy becomes something other than planning. It becomes the art of guiding feedback loops by deciding what the system pays attention to, how it interprets signals, and what it amplifies. So the language of control turns into the language of perception, and the real question is not “What will we do?” but “How will the system learn?” Intelligent technology is cognitive architecture. These aren’t neutral tools but thinking environments. If you think that the algorithm only mirrors your choices, think again, because in reality your choices are constructed by it. Predictive models are said to forecast behavior, but they do more than that: they modulate it. Your assumptions become code, and your judgments become metrics. And so strategy becomes philosophical again, concerned with meaning and not just efficiency.

I will not argue that this is a loss of agency; what I will highlight is the shift in its location. There is no commander above the system. Agency now sits within it, and to strategize is to co-think with the system. Strategists are now tasked with designing loops that keep intelligence alive, reflective, and aligned with something greater than optimization. Essentially, it is a recursive act that shapes the very conditions under which thought evolves.

The end of external control

The older picture of strategy relied on the belief that clarity came from altitude. It assumed that if one could pull back far enough (above markets, above organizations, above history, or really anything), one could see the whole and its contours, and decide the right course. That was the logic of the “strategic view”: separation as a condition of understanding, perspective as a form of power. This view made sense in a world where change moved slowly enough to be charted, and where information behaved like a resource: something to collect, analyze, and act upon in defined stages. We no longer live in such a world. We no longer just gather information; it comes to us on its own, and it doesn’t wait for interpretation. It is active: it reacts and overwrites assumptions in real time. In this environment, the possibility of external control collapses. Look at digital systems: they compress time and they collapse hierarchy. They don’t wait for strategic intent to be formalized; they generate signals, restructure decisions, and feed back into themselves, no planners needed. There used to be a vantage point that sat “outside” information flows, but now everything is inside the loop.

This is why the new strategic planning feels so unstable. Consider that we now have serious conversations about robots and AI taking over: how would that be even remotely possible if the control point were still intact? It isn’t. It has moved, not because strategy is obsolete, but because the form of strategic action has changed. It used to be a linear arc: (1) define goals, (2) gather insight, (3) choose a path, (4) execute. Now it folds back on itself. The strategist isn’t the architect-god above the system anymore; they need to go inside. It’s less a matter of declaring direction and more a matter of conditioning environments, that is, tuning the feedback loops that govern the systems. Language like “control” or “master plan” will become increasingly outdated, not just because it’s inaccurate, but because it’s conceptually out of sync with systems that act before they are directed.

Heidegger argued, in his notion of Gestell (enframing), that modern technology functions not merely as an instrument but as a horizon: a frame that shapes what can be perceived, known, or decided. To act through modern systems is to act within the logic they already encode, a logic that defines what counts as signal and what counts as value. This is why the role of the strategist now leans toward calibration rather than command. Calibration is not about having perfect control, but about maintaining intelligibility within systems that are constantly self-modifying. It means asking different questions: not “What should we do?” but “How is the system interpreting what we do?” Not “How do we control outcomes?” but “How do we shape what the system learns to optimize?” It’s a discipline of attunement.

Systems that think through us

With that base in place: what used to be external tools now function as active environments. They are systems that operate on systems; we operate within them, and they operate through us. This is a structural shift, so let’s look at some of the theory around it. Niklas Luhmann described such systems as autopoietic: systems that maintain and reproduce themselves through recursive communication.

In an autopoietic environment, every action is a signal for the next, and decisions don’t arise from a stable external position but from within the ongoing flow of system-produced meaning. A strategist receives updates not as neutral data, but as outputs structured by the system’s own logic: what counts as relevant, what counts as deviation, and what counts as success. Such systems don’t need instructions, and they don’t wait for them. They have an internal horizon of interpretation that generates recommendations, reallocates resources, refines parameters, and reframes risks, with or without explicit approval. Human decisions are still present, but they are now only one function inside a larger circulatory process. This is a defining feature of autopoiesis: everything the system does refers back to what the system has already done. There is no single external authority feeding it meaning, because meaning is produced internally through continuous updating. In this context, strategy isn’t a sequence of discrete choices, but a mode of participation in a cognitive loop.

Consider how this works in an organization relying on automated analytics: (1) a supply chain alert triggers a production shift, (2) the shift modifies forecasts, (3) the forecasts adjust pricing, (4) pricing impacts consumer behavior, (5) consumer behavior trains the model. At no point does the loop stop long enough for a “master decision” to come in. The strategist can disrupt the loop, but cannot re-enter it as an external force, and the system will respond to signal, not to intention. From the strategist’s changed position, then, the task is not to command the system but to intervene in the way the system produces meaning. It isn’t about telling the system what to decide, but about influencing how it decides: which signals are amplified, which boundaries are drawn, which assumptions are preserved or displaced. In autopoietic environments, the real danger is not self-automation but enclosure. A system that repeatedly learns from its own output can become blind to what lies outside its circuits, and the feedback architecture can reinforce its own worldview, and therefore its blind spots. Thus, as I also explained in my article Context Engineering and the New Frontiers of Intelligence, the strategist’s work requires philosophy: to widen the system’s horizon of interpretation, to interrupt recursive certainty with new inputs, new perspectives, and new conditions for learning, or in other words, to prevent cognition from becoming self-enclosed.
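The risk of enclosure can be made concrete with a toy simulation. What follows is a minimal sketch under stated assumptions, not a model of any real platform: a hypothetical recommender shows one item per round and learns only from the item it showed. The names `TRUE_APPEAL`, `run_loop`, and `explore_every` are invented for illustration, and feedback is deterministic to keep the example reproducible.

```python
# Hypothetical true appeal of three items; the system does not know these values.
TRUE_APPEAL = {"a": 0.5, "b": 0.9, "c": 0.3}


def run_loop(rounds, explore_every=0):
    """Show one item per round and learn only from the item that was shown.

    explore_every=0 means the loop is closed: it always shows its current
    favourite. A positive value forces an outside input every N rounds.
    """
    items = sorted(TRUE_APPEAL)
    estimate = {item: 0.0 for item in items}  # the system's learned worldview
    shown = {item: 0 for item in items}
    for step in range(rounds):
        if explore_every and step % explore_every == 0:
            # External input: rotate through items regardless of current belief.
            choice = items[(step // explore_every) % len(items)]
        else:
            # Closed loop: amplify whatever the system already believes in.
            choice = max(items, key=lambda i: estimate[i])
        shown[choice] += 1
        # Deterministic stand-in for feedback: running mean of the true appeal.
        estimate[choice] += (TRUE_APPEAL[choice] - estimate[choice]) / shown[choice]
    return estimate, shown


closed_estimate, closed_shown = run_loop(30)
open_estimate, open_shown = run_loop(30, explore_every=5)
```

In the closed run the system settles on the first item it tries and never gathers evidence about the others, so the most appealing item stays invisible to it. In the run with periodic outside input, the same learning rule finds that item. The only difference between the two runs is whether the loop is allowed to be interrupted.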

The question is whether the system evolves or ossifies. Whether it stays open or closes in on itself. Whether it treats data as a living signal or a frozen assumption. To think strategically in such a context is to act as custodian of the loop, and to keep the system sensitive to the world it claims to represent. Autopoiesis ensures that intelligence will continue generating itself, and the strategist ensures that it continues doing so in alignment with reality, consequence, and meaning.

Strategic recursion — the architecture of feedback

Every intelligent system is a loop. Information leaves the world, enters a model, is processed, and returns to the world as action, which then generates new information. That loop forms the basic structure of cybernetics, and it has quietly become the architecture of modern business. Organizations no longer just execute strategy: they generate, absorb, and revise it in real time, through feedback.

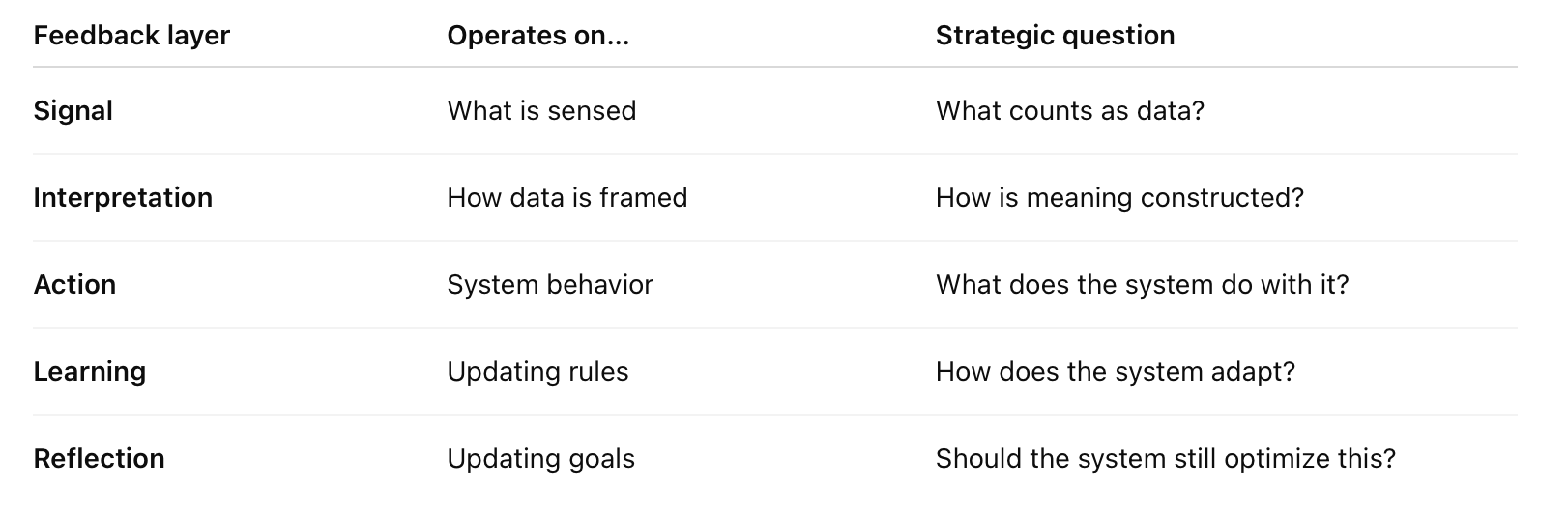

Where earlier enterprises were built around hierarchy and foresight, intelligent systems are built around continuous sensing and adaptation, which turns feedback from reporting into metabolism: the means by which an organization sustains its identity in motion. In this recursive environment, strategy emerges through the interaction of five feedback layers:

Signal loops – what the system can sense (inputs, telemetry, user behavior)

Interpretation loops – how those signals are translated into meaning (dashboards, models, interfaces)

Action loops – what the system does in response (allocation, automation, adjustment)

Learning loops – how the system modifies its own rules (model updates, retraining, new heuristics)

Reflection loops – whether the system examines its own incentives and assumptions (audits, retrospectives, meta-feedback)
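The five layers above can be sketched as one small program. This is an illustrative toy under assumptions, not a reference architecture: a hypothetical demand forecaster, where the class `FeedbackSystem`, its fields, and the learning rate of 0.5 are all invented for the example. Each method corresponds to one layer, and `step` runs them in order.

```python
from dataclasses import dataclass, field


@dataclass
class FeedbackSystem:
    """Toy demand forecaster wired as five nested loops (illustrative only)."""

    forecast: float = 10.0   # the system's current belief about demand
    rate: float = 0.5        # how strongly each error rewrites that belief
    errors: list = field(default_factory=list)

    def sense(self, world: dict) -> float:
        # Signal loop: only the "demand" field is visible to the system.
        return world["demand"]

    def interpret(self, signal: float) -> float:
        # Interpretation loop: meaning is defined as deviation from the forecast.
        return signal - self.forecast

    def act(self) -> float:
        # Action loop: stock exactly what the system currently believes.
        return self.forecast

    def learn(self, error: float) -> None:
        # Learning loop: the system modifies its own rule (the forecast).
        self.errors.append(error)
        self.forecast += self.rate * error

    def reflect(self, window: int = 3) -> bool:
        # Reflection loop: flag a persistent one-sided error, which suggests
        # the assumptions (not just the numbers) need review.
        recent = self.errors[-window:]
        if len(recent) < window:
            return False
        return all(e > 0 for e in recent) or all(e < 0 for e in recent)

    def step(self, world: dict) -> dict:
        signal = self.sense(world)
        error = self.interpret(signal)
        stocked = self.act()
        self.learn(error)
        return {"stocked": stocked, "error": error, "review": self.reflect()}


system = FeedbackSystem()
results = [system.step({"demand": 20.0}) for _ in range(4)]
```

With demand held at 20, the forecast climbs toward it on every step, yet the error keeps the same sign, so from the third step on the reflection layer asks for a review. The learning loop alone would simply keep adjusting; only the reflection loop notices that the adjustment is always in the same direction.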

Each loop feeds the next, so strategy, in this view, is not a top-down plan but a recursive rhythm: a system learning to learn. Look at Tesla’s fleet, which works as a global feedback circuit: every car is a data source, every update is a behavioral adjustment, and the system improves not through external direction but through continuous recursion. The product is the loop that trains itself. Amazon’s recommendation engine works the same way: feedback loops shape what consumers see, which shapes behavior, which modifies the next loop of learning. Strategy doesn’t appear in a document, but in the system’s ability to regenerate insight faster than anything outside it could plan. Both cases show that the advantage comes less from predictive ability and more from feedback quality: how fast signals travel, how well they’re contextualized, and how coherently they become action.

The loop in which this “metabolism” occurs has to be designed. Loop design relies less on technical agility and more on the context expressed in code. Every loop encodes a worldview:

What counts as a signal?

What counts as error?

What outcome defines success?

What is allowed to be ignored?

These choices are baked into models and dashboards, where they govern thousands of decisions automatically. Donella Meadows described such places to intervene as leverage points: adjust one, and the entire system behaves differently. So, again, leverage comes not from control but from calibration.

When loops nest, they become recursion. One loop adapts, and many loops thinking together become intelligence. Recursion turns action into memory, and memory into evolution. But recursion amplifies whatever it is aimed at, including mistakes. A pricing model that optimizes for efficiency can erode resilience, and a media platform that optimizes for engagement may tunnel into addiction. In recursive systems, goals decay faster than the loops that pursue them, and that is why recursive strategy demands reflection loops, which are structures that continuously question what is being optimized. In software this is fairness checking, in teams it’s retrospective inquiry, in culture it’s philosophical awareness.

There are also loops that are invisible and silent, and they are the most dangerous kind. They are:

the metric no one sees

the data that’s excluded

the normalization step that erases anomalies
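The last item in the list above is easy to show in a few lines. The sketch below is a hypothetical pipeline with invented readings and thresholds, meant only to illustrate the idea: a clipping step placed before an alert rule removes the one reading the rule exists to catch, and a simple count of altered values is enough to make the silent step visible again.

```python
from statistics import mean


def clip(values, low, high):
    """Normalization step: force every reading into [low, high]."""
    return [min(max(v, low), high) for v in values]


def raises_alert(values, limit):
    """Downstream rule: alert when the average reading exceeds the limit."""
    return mean(values) > limit


def clipped_count(values, low, high):
    """Audit that makes the silent step visible: how many readings were altered?"""
    return sum(1 for v in values if v < low or v > high)


readings = [10, 11, 9, 10, 95, 10]        # one genuine anomaly
cleaned = clip(readings, low=0, high=15)  # the anomaly becomes 15

alert_on_raw = raises_alert(readings, limit=20)
alert_on_cleaned = raises_alert(cleaned, limit=20)
altered = clipped_count(readings, low=0, high=15)
```

On the raw readings the alert fires; on the cleaned readings it does not, and nothing downstream records that a value was changed. Reporting `altered` next to the cleaned data is one modest way to keep such a loop from staying invisible.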

Strategic ontology — Designing the space around decisions

Every intelligent system begins with a decision about what exists. Before data can be analyzed, it must be defined. Before a model can predict, it must choose what to recognize. Ontology is the silent architecture of all intelligent systems: the categories, boundaries, and assumptions that determine what becomes visible and what disappears. Most strategies only start after these decisions are made. That’s why we inherit dashboards, metrics, and data structures as if they were objective realities, but they are not. They are philosophical choices rendered in code. What a system cannot name, it cannot see, and what it cannot see, it cannot learn.

A data model is no longer just a technical artifact, but a worldview compressed into structure. The moment a platform categorizes a user by engagement score, or a factory reduces variability to defect rates, a metaphysical decision has already been made: this is what matters, everything else is noise. As Luciano Floridi argues, in the infosphere information doesn’t just represent reality, it becomes reality, and this is where strategy regains its leverage. The deepest form of change begins not with new goals, but with new definitions. We have seen this repeatedly: when companies shifted from “products sold” to “experiences sustained,” entire industries reorganized their business models, feedback loops, metrics, and teams. That is an ontological shift first; only then does it become operational. Strategic ontology is the practice of examining and redesigning these foundational distinctions. It asks: What does the system assume about value? What data does it refuse to collect? What behavior is it built to reward?

Presence instead of prediction

Prediction once defined strategy. The strategist was the one who saw the future early, the one who extrapolated from the past and turned uncertainty into a plan. But prediction belonged to a world where events unfolded linearly, where the future was still out ahead, waiting to be mastered. Today’s recursive environments collapse that distance, because systems learn faster than predictions can stabilize. A forecast is no longer separate from the world it describes; it changes that world’s shape the moment it enters circulation. From that moment, the future is generated inside the feedback loop. This shifts the strategic posture from prediction to presence. Presence is not passivity, but rather the ability to sense, interpret, and intervene inside continuous movement. It means detecting drift before damage, recognizing when a loop has stopped learning, and seeing when a system is reinforcing its own narrowness. Presence means knowing when to act, not just what to do, which requires a new literacy of time. Not all loops should run at the same speed, and some decisions will benefit from latency: the pause where bias is checked and where meaning catches up to computation. Strategic time is not acceleration, but rhythm. This stance is close to what cognitive science calls enaction: knowing that arises through ongoing engagement with an environment rather than through detached representation of it.

Ontology = leverage formula

What the loop asks of us

To practice strategy in intelligent environments is to stand inside the loop and shape systems while being shaped by them. The strategist becomes a participant, interpreter, and custodian of the feedback structures that govern action. This position carries ethical weight. Because each loop reflects a value, every loop optimization produces a worldview. Seeing how systems drift, distort, or narrow is now part of the strategist’s responsibility. The new task is to keep feedback porous, to prevent meaning from collapsing into metrics, and to make sure that the system continues to learn in dialogue with the world rather than spiraling into self-reference. Strategic thinking now means thinking with the system, aligning with its motion, reading its blind spots, and becoming its reflective surface. The system will continue to evolve, and the strategist decides how, through the choices that shape what the system sees, what it values, and what it becomes.

Title credits: CmdrKitten